The announcement of the results and the awards ceremony for the TRON Programming Contest 2025 took place at the 2025 TRON Symposium – TRONSHOW – on December 11, 2025.

We received roughly 64 entries from Japan and abroad, 31 of which advanced to the final submission stage. The judging panel evaluated each work holistically—considering convenience, practicality, originality, and future potential—with particular emphasis on the level of completion for μT-Kernel 3.0 compatibility, alignment with the AI-utilization theme, and the authors' commitment to open-source release. In the end, 11 works were selected in total, including four Excellence Awards and additional Prize and Encouragement Award winners.

Review by the judges

Ken Sakamura (Chief Judge)

Chair of TRON Forum

IEEE Life Fellow / Golden Core Member, IEEE Computer Society

Professor Emeritus of the University of Tokyo

This year’s TRON Programming Contest was held under the theme, “TRON × AI – Utilizing AI,” and challenged participants to explore how real-time operating systems and artificial intelligence — two technologies that may seem distant at first glance — can be effectively combined and implemented in practical systems. As a result, we received many highly motivated and creative submissions from both Japan and abroad. In the RTOS Application (General) Division, “LGX-Shield: Edge Monitor for Real-Time Bridge Anomaly Detection Using Acceleration × AI” stood out as an exceptional work with a clear objective directly connected to social infrastructure. From FFT analysis and feature extraction to real-time inference processing, the implementation demonstrated a high level of technical proficiency. The effort to go further by developing original device drivers and network processing was also highly commendable. Similarly, in the RTOS Middleware Division, “Self-Evolving LoRa Communication Library ‘LoRabbit’” showcased outstanding completeness as an adaptive, AI-assisted communication library. The way it fused LoRa communication with AI optimization, while making full use of µT-Kernel’s characteristics, left a strong impression on the judges.

On the other hand, we also saw great potential in the projects using micro:bit. Despite its limited hardware capabilities, many participants made clever use of its portability and low power consumption, resulting in creative applications such as weather prediction, motion analysis, and TinyML implementation. In particular, “µT-Kernel × AI Learning Environment - Learn and Experience Real-Time OS and AI with Line Follower Robot” and the “Motion Analysis Tool for Wearable that runs µT-Kernel” demonstrated significant educational value and can be considered excellent examples contributing to the future development of engineers.

Among the international entries, we also saw ambitious challenges involving advanced technologies such as human detection using YOLO and SLAM. These works clearly demonstrated that the potential of combining TRON RTOS and AI is expanding beyond national borders.

That said, there were a few projects where the ideas were quite promising, but the implementation could not be fully completed in time, or where the purpose of using AI was not clearly defined. Implementing both AI and real-time embedded control together requires solid design skills and careful planning. We strongly encourage participants to build on this experience and take on the challenge again with even more refined and complete systems.

AI does not belong only in the cloud. Its true strength lies at the edge, where sensors and operating systems are closely tied to the real world. With AI added as a new driving force to the TRON Project's long-standing vision of “connecting the real world with computing,” this year’s contest has taken an important step toward the future.

We sincerely look forward to witnessing even more advanced and innovative challenges from all of you in next year’s contest.

Takashi Goto

Vice President Strategy, Mergers & Acquisitions, Infineon Technologies Japan K.K.

This year, we received entries not only from Japan but also from many countries around the world. On behalf of Infineon Technologies Japan, I would like to express my deepest respect to all participants.

The theme for this year, “TRON × AI,” was a highly challenging one, combining real-time OS with AI. I understand that while many of you worked hard to bring your diverse ideas to life, there were moments when technical difficulties made it hard to realize your vision as you had hoped.

However, next year, we expect to see even more diverse platforms and enhanced development tools, which will further improve the environment for turning your ideas into reality. Infineon is proud to support such evolution by offering a wide range of microcontroller products, including PSOC™ Edge, AIROC™ Connected MCU, and the highly regarded AURIX™ family for industrial and automotive applications. These platforms support AI/ML, motor control, and power conversion, and feature low-power, high-performance AI inference, robust security, wireless connectivity with Wi-Fi 6 and Bluetooth LE, and rich development tools such as ModusToolbox™. Combined with TRON OS, they enable the creation of more efficient and secure systems. In particular, PSOC™ Edge is an ideal platform for quickly implementing edge AI in a wide variety of IoT applications, such as smart homes and industrial equipment.

This contest serves as a bridge to the future. I was deeply impressed by the enthusiasm of those who chose Infineon products for their challenge. We expect that you will lead future innovation and deliver new value to the world. Once again, I congratulate all the award winners, and I look forward to seeing the wonderful products you will create in the market in the years to come.

Paolo OTERI

STMicroelectronics K.K.

Microcontroller・Digital IC+RF product group

Asia Pacific region Vice President

Thank you for participating in the 2025 TRON Programming Contest. We are impressed by the variety of project entries, which contribute to enhancing product development and enriching the ecosystem for STMicroelectronics' STM32 family.

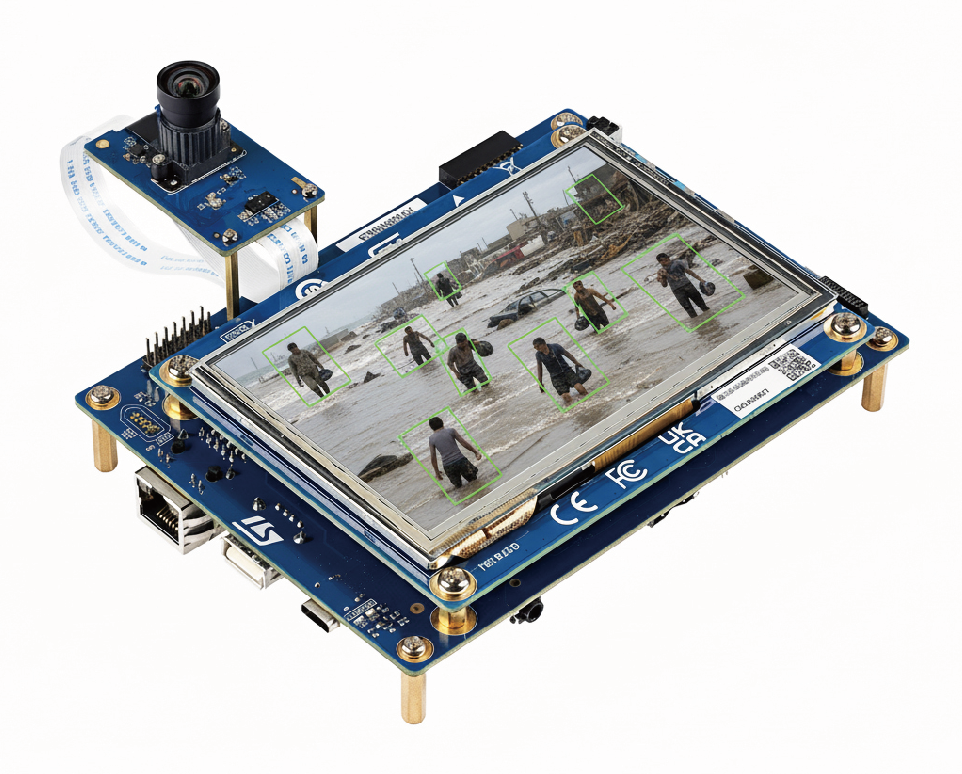

A standout entry in the "RTOS Application Student Division" is the "RescueBot" project. Running on ST's flagship STM32N6 microcontroller, its embedded AI model detects the number of people present in camera images in real time. The project has been published as open source on GitHub, and we anticipate it will be a valuable resource for embedded AI developers.

We also noted the advanced use of microcontrollers, development environments, and µT-Kernel across all entries, which made the judging process challenging. We believe this contest has generated many innovative projects that provide excellent guidance for future application development.

Congratulations to all the winners, and our sincere thanks to every participant.

ST remains committed to expanding our product line, development environments, and support to ensure that more users can seamlessly integrate the STM32 family into their system development.

Akihiro Kuroda

Vice President, Embedded Processing Marketing Division, Embedded Processing Product Group, Renesas Electronics Corporation

Thank you to everyone who participated in the TRON Programming Contest 2025, and congratulations to all of our winners.

This year’s theme, “TRON × AI: Utilizing AI,” invited participants to explore how artificial intelligence can be integrated into embedded systems. We truly appreciate the effort and creativity you demonstrated in taking on this challenge.

While some projects were not able to fully implement AI, many showed great potential. Had they been completed, they could have been truly remarkable. We hope these ideas will inspire even more innovative submissions in future contests.

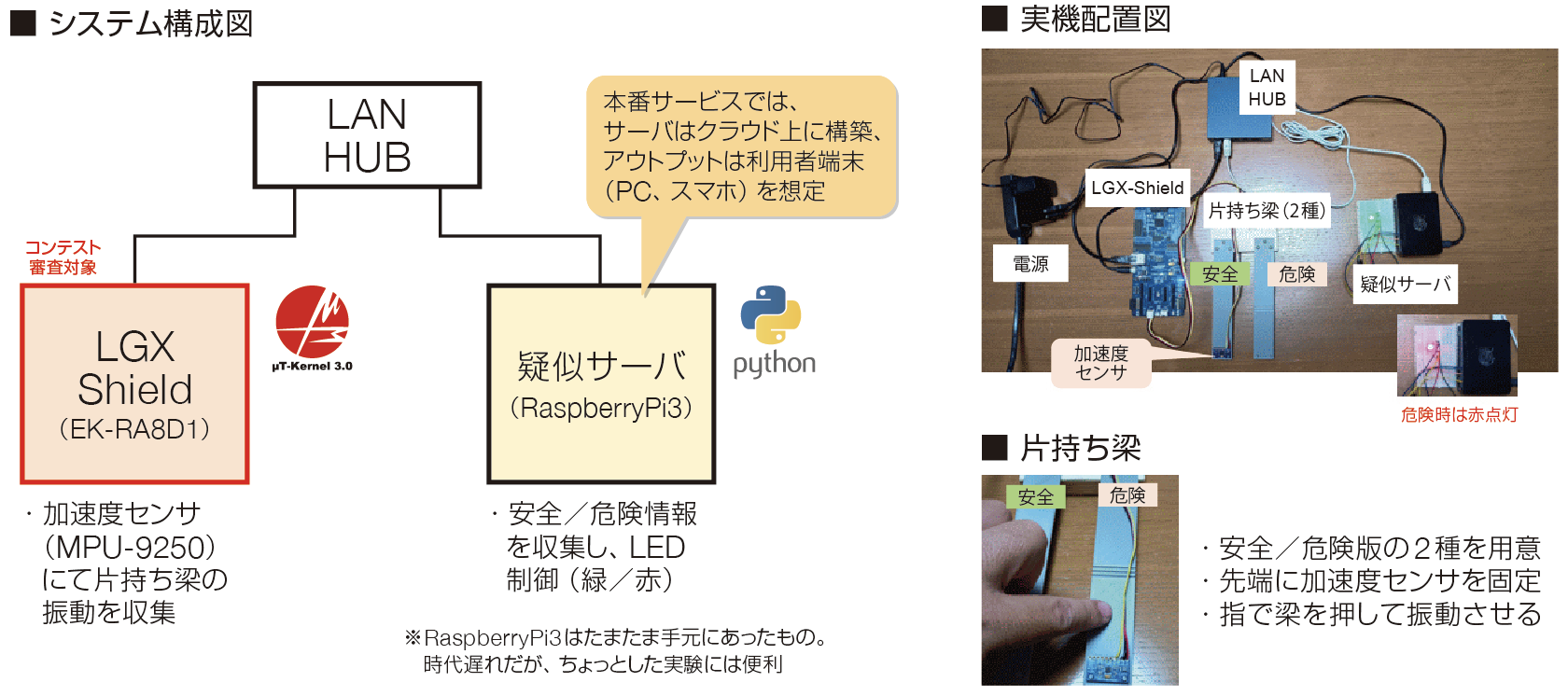

In the application category, the project “LGX-Shield: Edge Monitor for Real-Time Bridge Anomaly Detection Using Acceleration × AI” impressed us with its practical approach to infrastructure monitoring using Renesas’ EK-RA8D1 board. In the RTOS middleware category, the “Self-Evolving LoRa Communication Library ‘LoRabbit’” demonstrated not only a clever combination of µT-Kernel and AI but also thoughtful design, including custom device drivers and API preparation. These projects earned high praise for their technical sophistication and forward-thinking approach.

Renesas Electronics will continue to provide MCUs ranging from high-end to low-end, low-power devices, offering products that are easy to use for real-time applications and AI, and supporting your system development.

Akira Matsui

Sub Chair of T3/IoT WG, TRON Forum

President, Personal Media Corporation

Thank you very much for submitting so many works to the TRON Programming Contest 2025. Congratulations to all the award winners of the contest. I would also like to commend all the participants for their efforts.

This year's theme was "Utilizing AI." While embedded real-time operating systems like µT-Kernel and AI represented by ChatGPT and LLMs are both important computer technologies, at first glance they do not seem particularly related. What can be achieved by combining a real-time OS with AI? We awaited the submitted works with a mixture of such uncertainty and anticipation.

When we actually opened the submissions, we found many entries from both domestic and international participants, and felt even greater excitement than at last year's contest. In terms of the combination of real-time OS and AI, many applications took advantage of the compactness of the OS and the portability of the boards, with a notable trend toward practicing so-called "edge AI": utilizing AI locally in the field, away from the cloud.

At Personal Media Corporation, we have equipped micro:bit, an ultra-compact computer used for elementary school programming education and other purposes, with µT-Kernel, and provided it as one of the target boards for the contest(*1). While we believe micro:bit is a compact and uniquely interesting computer, it is admittedly underpowered compared to the latest microcontrollers with AI accelerators provided by various semiconductor companies, and cannot perform AI processing with heavy loads. However, it offers advantages such as palm-sized portability and AA battery operation, and we thought that the key point for contest entries would be creative ideas on how to utilize AI with these features. As a result, all the works that won higher-ranking prizes using micro:bit took advantage of battery operation and portability, demonstrating helpful insights for AI utilization on micro:bit and real-time operating systems.

On the other hand, several works had interesting ideas at the planning stage but grew too large to finish by the deadline, and were submitted with only some of the originally planned functions implemented. Using AI effectively in this contest requires skills in both AI and real-time embedded control (what might be called a "two-way" approach in today's terms), so it is natural that the development period would be lengthy. We hope that participants will continue development toward next year's contest and take on the challenge again with fully completed works.

We look forward to next year's contest.

(*1) IoT edge node practice kit/micro:bit(http://www.t-engine4u.com/products/ioten_prackit.html)

RTOS Application General

Yoshiaki SUDO

This compact monitoring device measures "vibrations" of civil engineering infrastructure such as bridges, enabling AI to instantly determine safety or danger. It collects vibration data from accelerometers 100 times a second, extracts characteristics of vibration through frequency analysis (FFT), and displays results via LED after AI makes on-site judgments.
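The frequency-analysis step described above can be illustrated with a minimal host-side sketch. It is not the LGX-Shield implementation: it uses a plain DFT instead of an optimized FFT, and the function names and the synthetic 5 Hz test signal are assumptions made for illustration. Only the 100 samples-per-second rate comes from the description.

```python
import cmath
import math

SAMPLE_RATE_HZ = 100  # from the description: 100 samples per second


def dft_magnitudes(samples):
    """Return normalized magnitudes for DFT bins 0..N/2 of a real signal."""
    n = len(samples)
    magnitudes = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(samples))
        magnitudes.append(abs(acc) / n)
    return magnitudes


def dominant_frequency(samples, sample_rate_hz=SAMPLE_RATE_HZ):
    """Return the frequency in Hz of the strongest non-DC bin."""
    magnitudes = dft_magnitudes(samples)
    peak_bin = max(range(1, len(magnitudes)), key=lambda k: magnitudes[k])
    return peak_bin * sample_rate_hz / len(samples)


# One second of synthetic 5 Hz vibration (hypothetical test signal).
window = [math.sin(2 * math.pi * 5 * i / SAMPLE_RATE_HZ)
          for i in range(SAMPLE_RATE_HZ)]
peak_hz = dominant_frequency(window)
```

Features such as the dominant frequency or per-bin magnitudes are the kind of compact input a small on-device classifier could consume; which features the actual device uses is not stated here.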

In maintenance workplaces where final judgment often relies on “experience and intuition,” this system visualizes conditions through numerical data, enabling anyone to make judgments.

Furthermore, although this was our first attempt at AI programming, we utilized generative AI (ChatGPT) to overcome the barrier of specialized AI knowledge, experiencing a “new collaborative development style between humans and AI.”

Though small-scale, it demonstrates the potential to tackle societal challenges.

github

https://github.com/yossudo/lgxs

Ryuji MORI

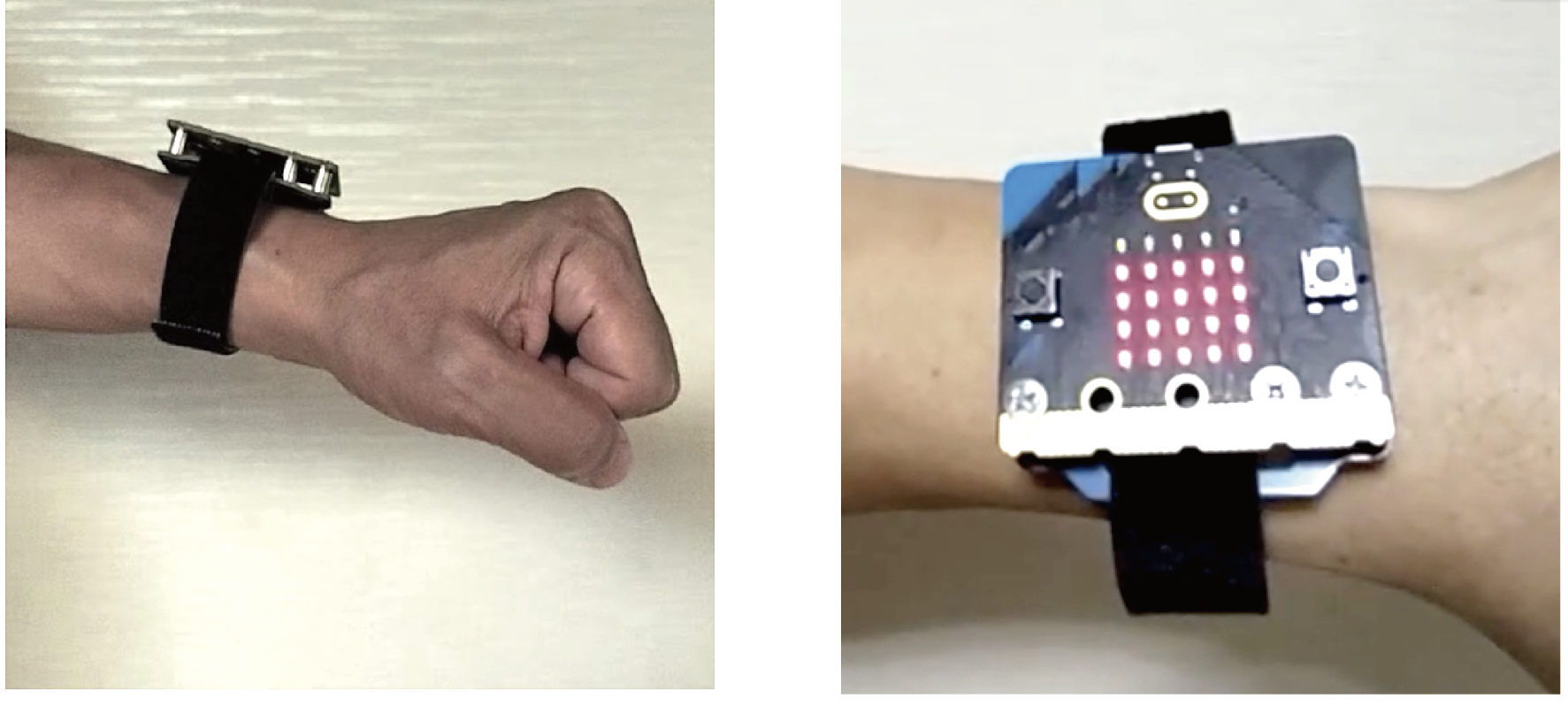

This motion analysis device is worn on the arm, senses your movements, and instantly displays differences from the sample reference movement via LED indicators. It allows you to intuitively identify areas for improvement in your movements during rehabilitation or physical exercise practice.

Designed to operate accurately with tiny batteries and limited components, the tool focuses on being “lightweight, simple, yet practical.” Furthermore, it offers flexibility for future expansion, such as tracking other body movements, enabling remote recording and monitoring, and tracking the movements of multiple people. Future developments may include having AI learn the movement patterns of a particular user to provide personalized, optimal advice.

github

【lsm303agr-utkernel】

URL: https://github.com/mnrj-vv-w/lsm303agr-utkernel

【WearableMotionAnalyzer-uTKernel】

URL: https://github.com/mnrj-vv-w/WearableMotionAnalyzer-uTKernel

【wearable-motion-visualizer】

URL: https://github.com/mnrj-vv-w/wearable-motion-visualizer

Yoshiyuki Shibukawa

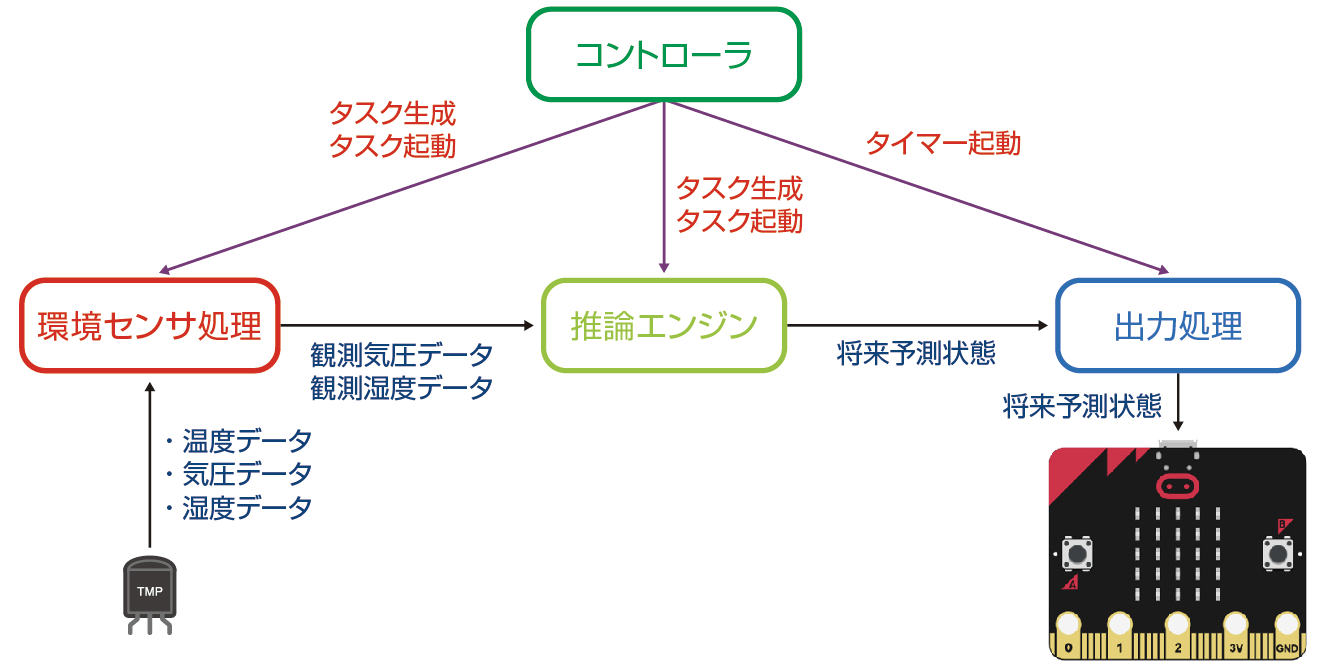

The environmental data inference system “AMAGOI” predicts weather conditions based on observation data acquired from environmental sensors over a period of time.

Weather conditions are predicted using actual measured values of barometric pressure and humidity, along with future forecast values calculated by an Extended Kalman Filter (EKF).
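To give a feel for how a filter can turn past readings into a forecast value, here is a simplified sketch. It deliberately swaps in a linear Kalman filter with a constant-rate model rather than AMAGOI's Extended Kalman Filter, and the class name, noise variances, and sample readings are all assumptions chosen for illustration.

```python
class PressureTrendFilter:
    """Linear Kalman filter over the state [pressure, rate of change]."""

    def __init__(self, first_reading, meas_var=1.0, process_var=1e-4):
        self.p = first_reading                  # pressure estimate (hPa)
        self.v = 0.0                            # rate estimate (hPa per step)
        self.P = [[100.0, 0.0], [0.0, 100.0]]   # assumed initial uncertainty
        self.r = meas_var                       # assumed measurement noise
        self.q = process_var                    # assumed process noise

    def step(self, reading, dt=1.0):
        # Predict: pressure moves along the estimated rate.
        self.p += self.v * dt
        (a, b), (c, d) = self.P
        p00 = a + dt * (b + c) + dt * dt * d + self.q
        p01 = b + dt * d
        p10 = c + dt * d
        p11 = d + self.q
        # Update: correct the state with the new pressure reading.
        s = p00 + self.r
        k0, k1 = p00 / s, p10 / s
        y = reading - self.p
        self.p += k0 * y
        self.v += k1 * y
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]

    def forecast(self, steps_ahead, dt=1.0):
        """Extrapolate the pressure estimate a few steps into the future."""
        return self.p + self.v * steps_ahead * dt


# Hypothetical readings: pressure falling by 0.5 hPa per step.
readings = [1013.0 - 0.5 * t for t in range(50)]
trend = PressureTrendFilter(readings[0])
for z in readings[1:]:
    trend.step(z)
predicted = trend.forecast(3)
```

A steadily falling forecast like this one is the sort of signal a weather inference stage could combine with humidity; how AMAGOI actually maps these values to weather conditions is not described here.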

The hardware uses the micro:bit microcontroller board and the enviro:bit environmental sensor.

On the software side, sensor measurement acquisition (environmental sensor processing), prediction (inference engine), and display (output processing) are implemented as independent tasks in the C programming language.

github

https://github.com/shibukawa-yoshiyuki/AMAGOI_for_microbit

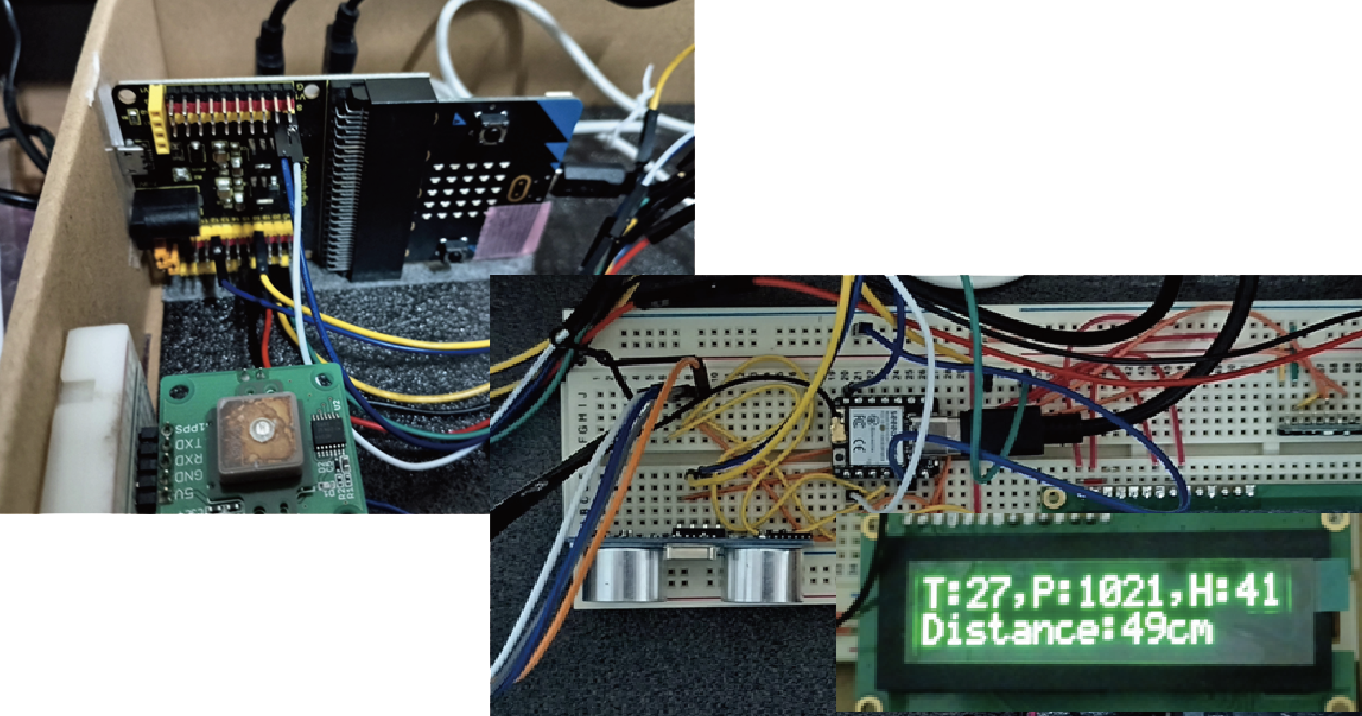

Takeichiro SEKIYA

This system estimates and displays the current location based on distance to obstacles, weather conditions (temperature, humidity, atmospheric pressure), and geographic coordinates. The development focus is to enable elderly people and people with disabilities to avoid collisions by understanding distances to obstacles while walking, and to detect sudden weather changes like heavy rain in advance to take safety precautions. Furthermore, when the user is unsure of the current location, displaying the present position is considered useful for hazard anticipation. Multiple µT-Kernel tasks run on the micro:bit, displaying information from ultrasonic distance sensors and temperature/humidity/barometric pressure sensors on the OLED. Additionally, a sub-board obtains place names that AI infers from GPS data and sends them to the micro:bit via UART, and the micro:bit displays the results on the OLED.

github

https://github.com/softwaredproducts/TRONContest2025

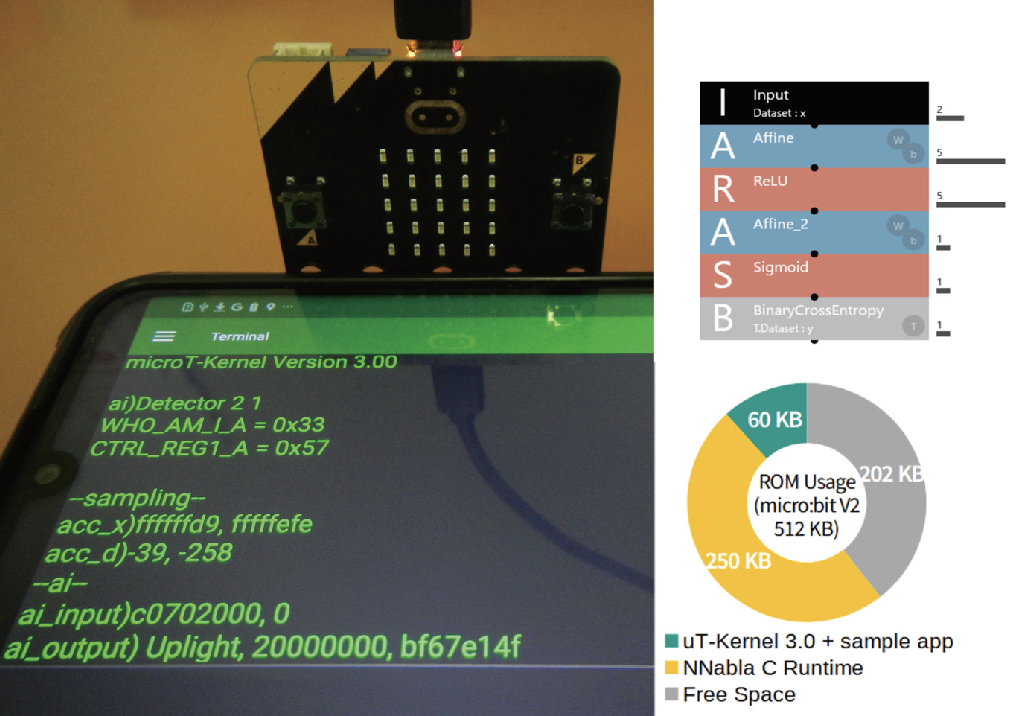

Tatsuya YUZAWA

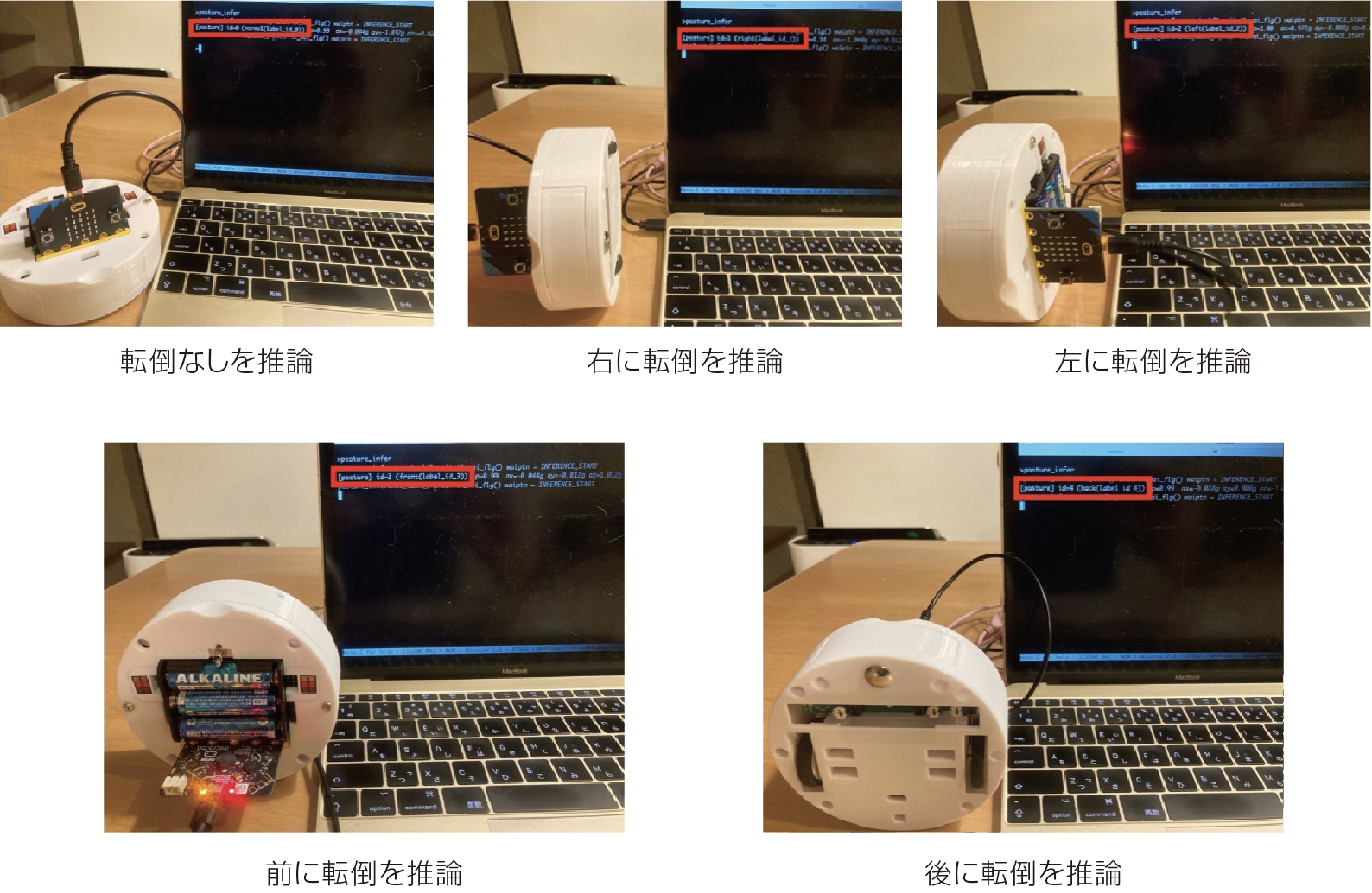

This work provides a porting layer to run TinyML, one of the edge node AI solutions, in a micro:bit + µT-Kernel 3.0 environment.

For TinyML implementation, it adopts the open-source execution environment NNAbla C Runtime (nnablart) from Sony's Neural Network Libraries (nnabla).

The µT-Kernel 3.0 source was modified, and Gemini by Google, a generative AI, was consulted for implementing the floating-point arithmetic library.

The sample posture detection application implemented using the micro:bit's built-in accelerometer uses 310KB of ROM with nnablart's unused functions included. Combined with µT-Kernel 3.0's small footprint, this leaves ample room for application development and tuning.

Porting to BTRON is also under consideration.

github

RTOS Application Students

Supun Navoda Gamlath / Shamila Jeewantha / Chathura Gunasekara

University of Moratuwa

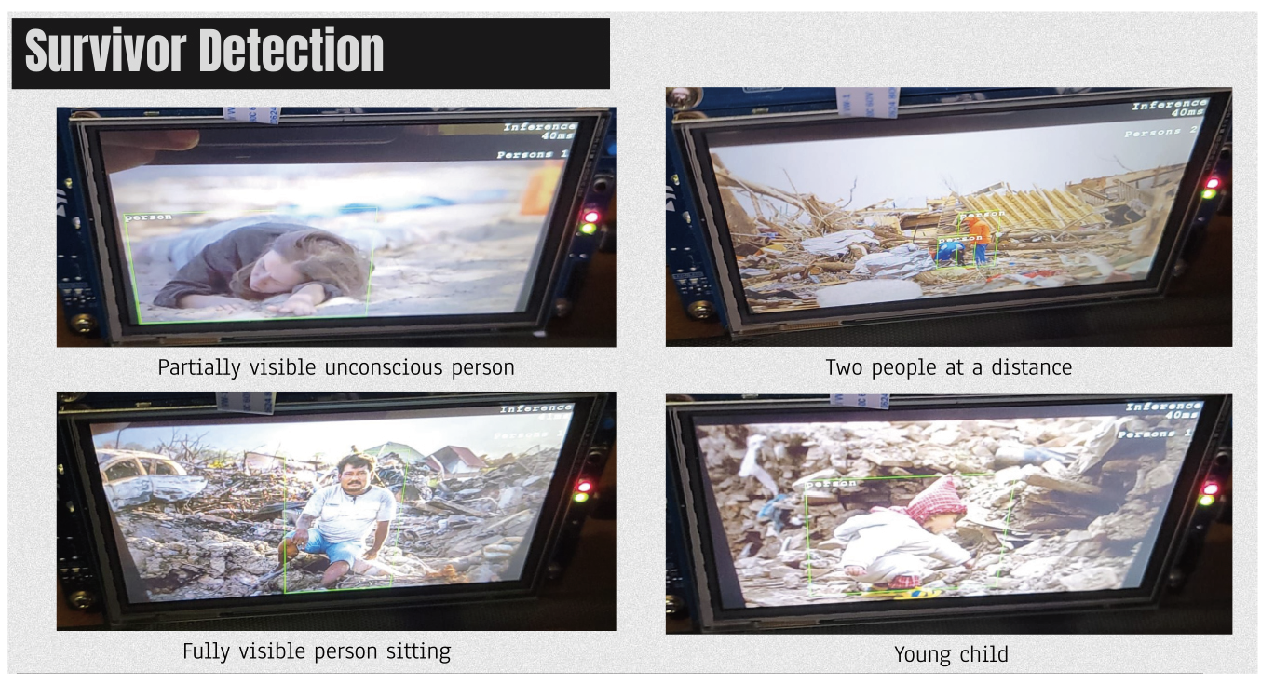

Real-time On-Device AI Framework for Post-Disaster Survivor Identification on STM32N6570

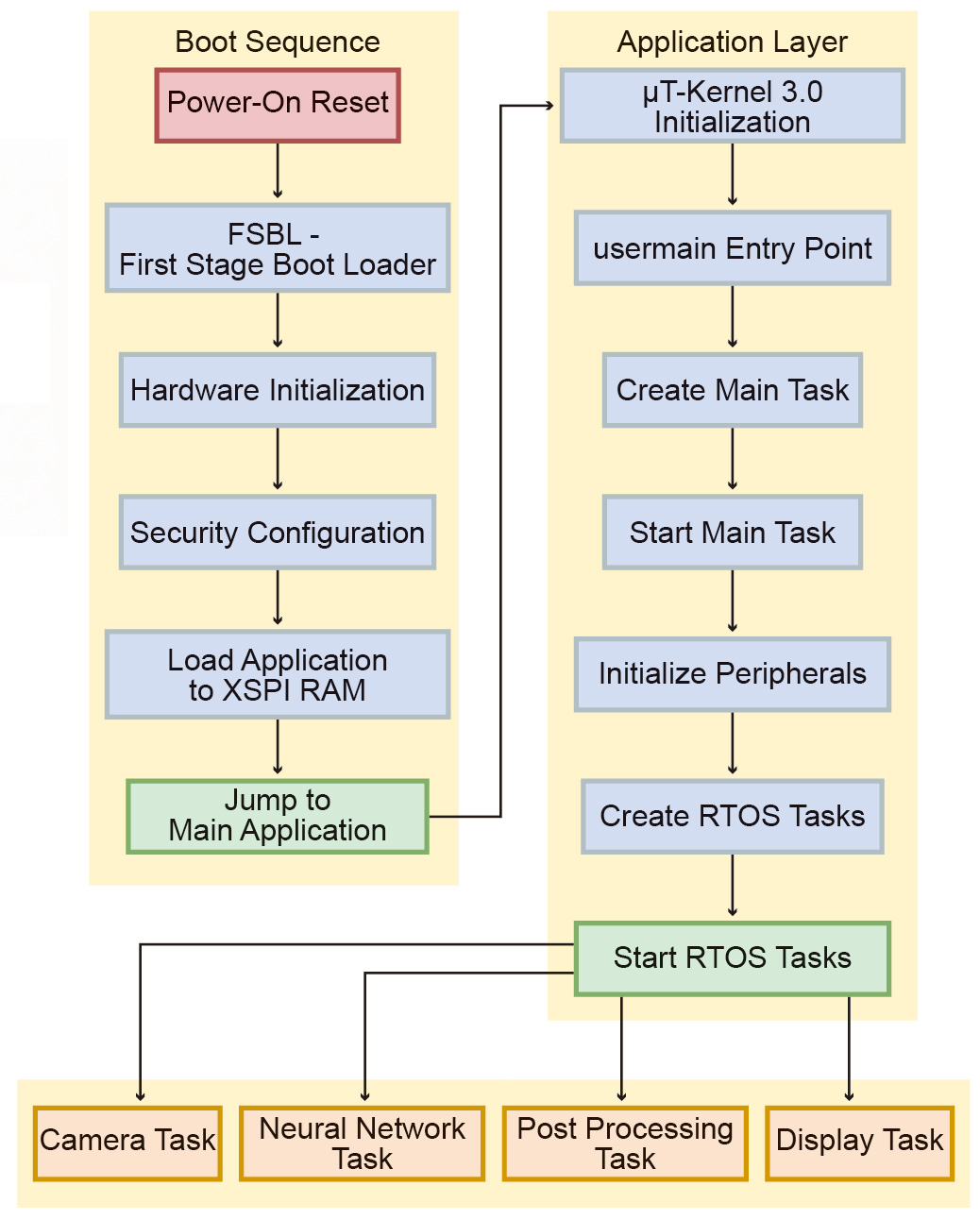

This project presents RescueBot, a real-time embedded AI framework designed for autonomous post-disaster survivor identification. Implemented as a µT-Kernel 3.0 RTOS application on the STM32N6570 Discovery Kit, it demonstrates how a neural network for computer vision can operate efficiently on a microcontroller without reliance on cloud connectivity for inference.

The system integrates a YOLO-X Nano neural network model, optimized and quantized for STM32’s Neural-ART Accelerator NPU, to detect human survivors in real-time camera input. Inference is executed at up to 25 FPS, using the on-chip image signal processor and MIPI-CSI camera interface. Detected individuals are tracked with a Kalman filter, and structured detection results are transmitted via UART in machine-parseable JSON format, enabling easy integration with external systems such as a robot for autonomous search and rescue operations.
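As a rough illustration of what "machine-parseable JSON over UART" can look like, the sketch below serializes one frame of tracked detections as a single newline-terminated line and parses it back on the receiving side. The field names (`frame`, `count`, `people`, and the box fields) are hypothetical and are not taken from the RescueBot repository.

```python
import json


def encode_frame(frame_id, tracks):
    """Serialize one frame of tracked detections as a newline-terminated JSON line."""
    message = {
        "frame": frame_id,
        "count": len(tracks),
        "people": [
            {"id": t["id"], "x": t["x"], "y": t["y"], "w": t["w"], "h": t["h"],
             "score": round(t["score"], 2)}
            for t in tracks
        ],
    }
    # Compact separators keep the line short for a serial link.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("ascii")


def decode_frame(line):
    """Parse one received line back into a dictionary (receiver side)."""
    return json.loads(line.decode("ascii"))


# Hypothetical detection: one tracked person with a bounding box in pixels.
line = encode_frame(42, [{"id": 1, "x": 10, "y": 20, "w": 30, "h": 60, "score": 0.912}])
message = decode_frame(line)
```

One JSON object per line lets a host such as a robot controller split the UART stream on newlines and parse each message independently.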

Using µT-Kernel 3.0, all operations are managed as deterministic real-time tasks with semaphores and message queues. The STM32N6’s powerful hardware allows for stable, low-latency execution of this computer vision pipeline entirely on-device.

This project demonstrates the feasibility of deploying deep learning models for life-saving applications directly on embedded platforms, paving the way for future intelligent search and rescue solutions.

github

https://github.com/supungamlath/STM32N6_Survivor_Detection

Adarsh A / Aayush S / Asrith S / Adithya A

Team V.I.S.I.O.N. (Visual Inertial System Integrating On-board Navigation)

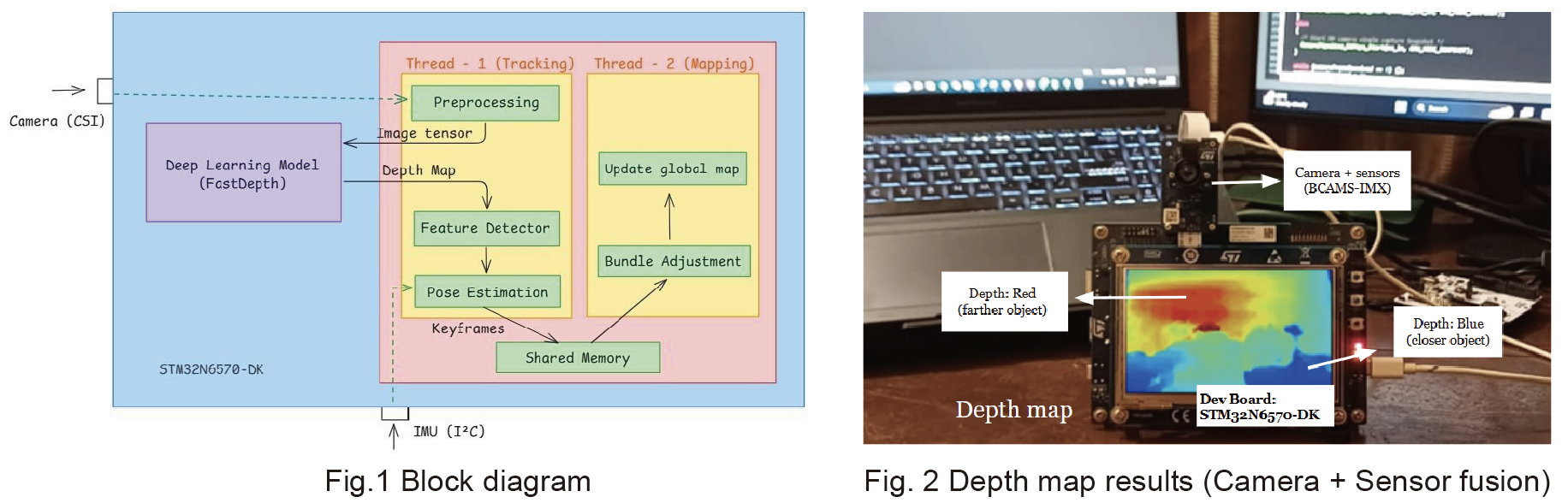

Integrating Edge AI with the real-time precision of TRON’s µT-Kernel 3.0, this project demonstrates a novel, cost-effective architecture for Visual Inertial SLAM on the STM32N6570 development board. While traditional autonomous systems rely on bulky, cost-prohibitive LIDAR for spatial understanding, our approach utilizes the board’s NPU to generate "Synthetic LIDAR" data. This is achieved by fusing camera depth maps with sensor data from a BCAMs-IMX module, creating a robust perception layer without the expensive hardware.

The system leverages the STM32N6’s Neural Processing Unit to accelerate depth estimation, interpreting visual input in real time. Crucially, µT-Kernel 3.0 manages these intensive operations, ensuring the strict timing requirements of sensor fusion are met with minimal latency. By successfully establishing this high-speed sensory foundation, the project effectively democratizes autonomous navigation technology, allowing compact embedded devices to perceive their environment with LIDAR-like precision.

github

https://github.com/Adithya1435/slam-rtos-tron

Kariuki Samuel Kiragu / Zuocheng Feng

GIFU UNIVERSITY

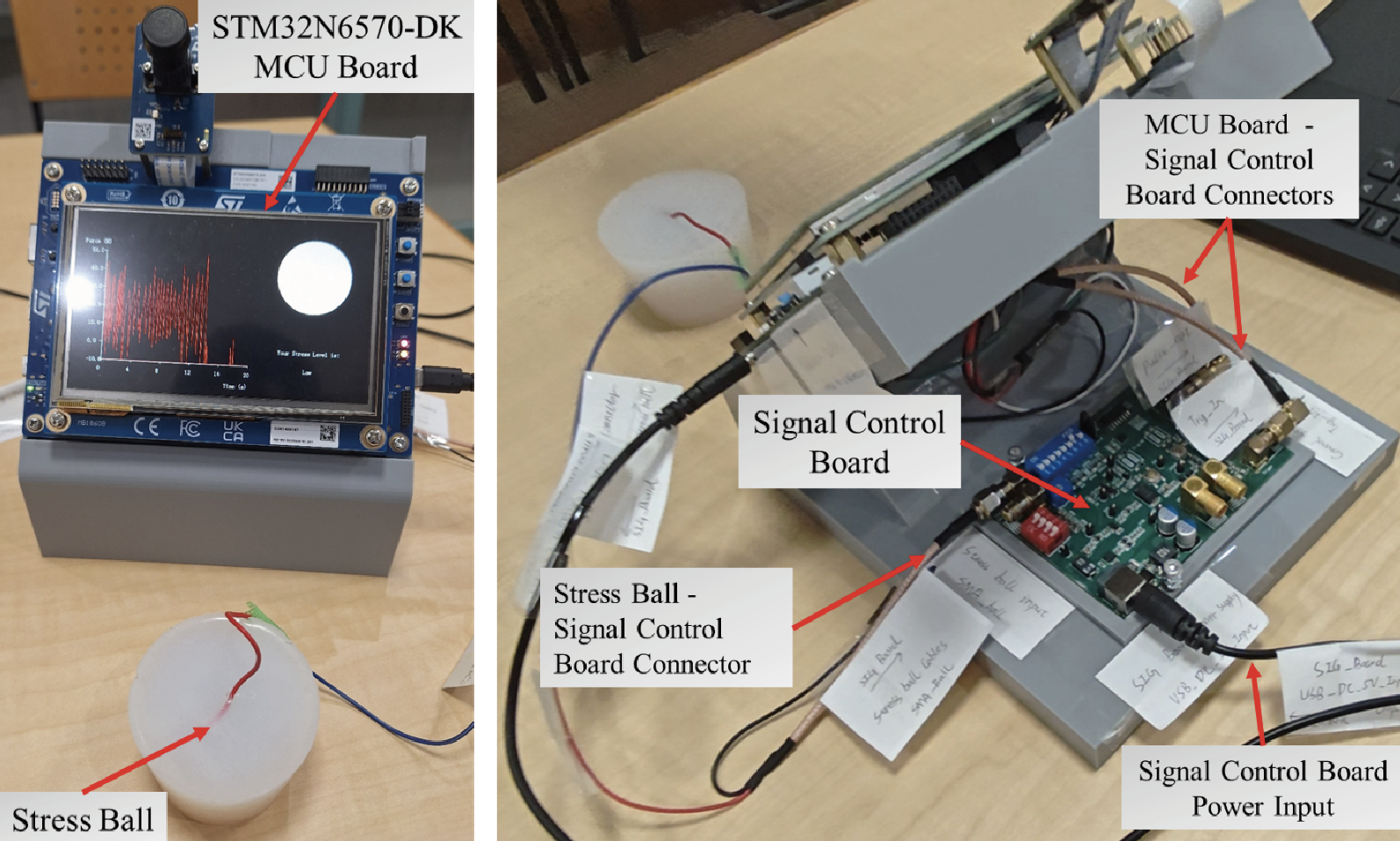

This project proposes a “Smart Stress Management Ball” that integrates tactile sensing with embedded machine learning. Highly reliable real-time processing is achieved using the STM32N6570-DK and the µT-Kernel 3.0 RTOS.

Through innovative structural and circuit design, tactile signals are converted into pulse-width modulation signals directly measurable by the STM32, significantly simplifying the system and reducing costs. The STM32 measures pulse width and estimates the input tactile signal using a viscoelastic compensation model.

Regarding machine learning, K-means groups the data into meaningful clusters as Low, Medium and High stress. These clusters are used as pseudo-labels to train a Random Forest model, which estimates stress levels based on pressing characteristics.
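The pseudo-labeling step can be sketched as follows. This is a minimal one-dimensional K-means written for illustration only: the single "peak pressure" feature, the sample values, and the function names are assumptions, and the Random Forest training that consumes the pseudo-labels is omitted.

```python
LABELS = ("Low", "Medium", "High")


def kmeans_1d(values, k=3, iterations=20):
    """Cluster scalar values into k groups and return the sorted cluster centers."""
    lo, hi = min(values), max(values)
    # Start with centers spread evenly across the observed range.
    centers = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centers[i]))
            groups[nearest].append(v)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else centers[i]
                   for i, g in enumerate(groups)]
    return sorted(centers)


def pseudo_label(value, centers):
    """Map a value to the stress label of its nearest cluster center."""
    nearest = min(range(len(centers)), key=lambda i: abs(value - centers[i]))
    return LABELS[nearest]


# Hypothetical peak-pressure readings from squeezing the ball.
peak_pressure = [1.0, 1.2, 0.9, 5.0, 5.3, 4.8, 9.1, 9.5, 8.9]
centers = kmeans_1d(peak_pressure)
labels = [pseudo_label(v, centers) for v in peak_pressure]
```

The resulting `labels` list plays the role of the training targets: a supervised model can then be fitted on the press features with these cluster-derived labels standing in for ground truth.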

The LCD displays the estimated stress level in real time. The project prototype shows how soft tactile sensors, embedded systems, and edge AI can be combined to realize an intuitive, real-time stress monitoring device.

PROTOTYPE STRESS BALL HARDWARE OVERVIEW

RTOS Middleware

Yuichi KUBODERA

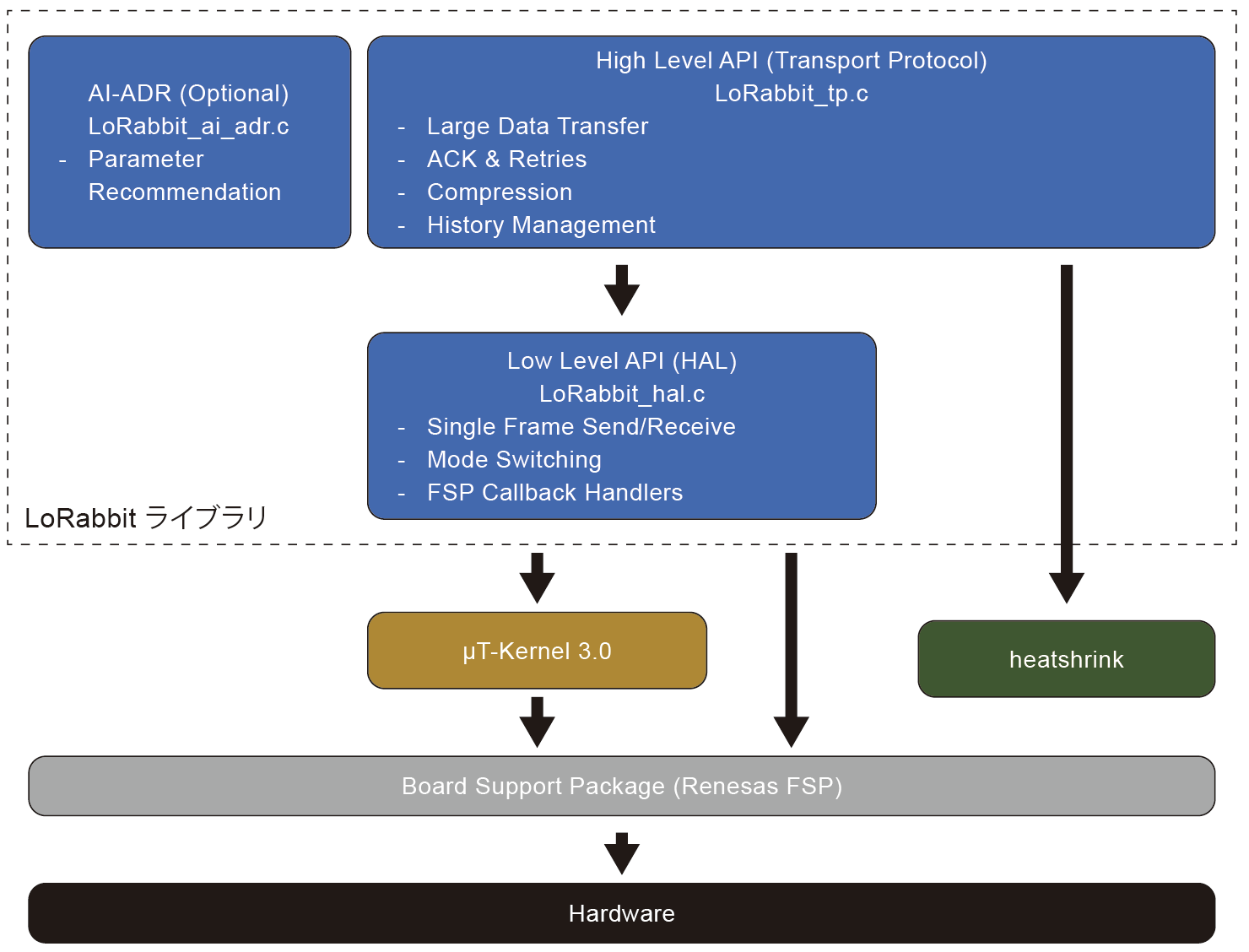

LoRa communication, which is widespread in IoT, faces challenges such as complex configuration and difficulties in transferring large amounts of data.

This work, “LoRabbit,” focuses on solving these challenges using the power of “µT-Kernel” and “AI.” It is a LoRa communication library developed for Renesas RA microcontrollers that run µT-Kernel 3.0.

Leveraging the synchronization mechanism of µT-Kernel 3.0, it efficiently performs data compression and highly reliable segmented transmission. Its major feature is the AI-driven “Adaptive Data Rate” function (AI-ADR).
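The idea of compressing a large payload and sending it in numbered segments can be sketched on a host machine as below. This is not LoRabbit's packet format or API: the 200-byte packet limit, the three-byte header layout, the use of zlib, and the function names are all assumptions made for illustration.

```python
import struct
import zlib

MAX_PACKET_BYTES = 200            # assumed per-packet payload limit
HEADER = struct.Struct(">BBB")    # assumed header: transfer id, sequence number, packet count
CHUNK_BYTES = MAX_PACKET_BYTES - HEADER.size


def segment(data, transfer_id):
    """Compress data and split it into header-tagged packets."""
    compressed = zlib.compress(data)
    chunks = [compressed[i:i + CHUNK_BYTES]
              for i in range(0, len(compressed), CHUNK_BYTES)]
    if len(chunks) > 255:
        raise ValueError("payload too large for a one-byte packet count")
    return [HEADER.pack(transfer_id, seq, len(chunks)) + chunk
            for seq, chunk in enumerate(chunks)]


def reassemble(packets):
    """Rebuild the original data from packets received in any order."""
    parts = {}
    expected = None
    for packet in packets:
        _transfer_id, seq, total = HEADER.unpack_from(packet)
        expected = total
        parts[seq] = packet[HEADER.size:]
    if expected is None or len(parts) != expected:
        raise ValueError("missing packets")
    return zlib.decompress(b"".join(parts[i] for i in range(expected)))
```

Because every packet carries its sequence number and the total count, a receiver can detect gaps and request retransmission of specific segments, which is one common way to make segmented transfer reliable.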

AI learns from communication history to “self-evolve,” automatically optimizing communication. Developers are freed from complex communication control tasks, and can focus on stable application development.

github

https://github.com/men100/LoRabbit

Shigeru MOTOTANI

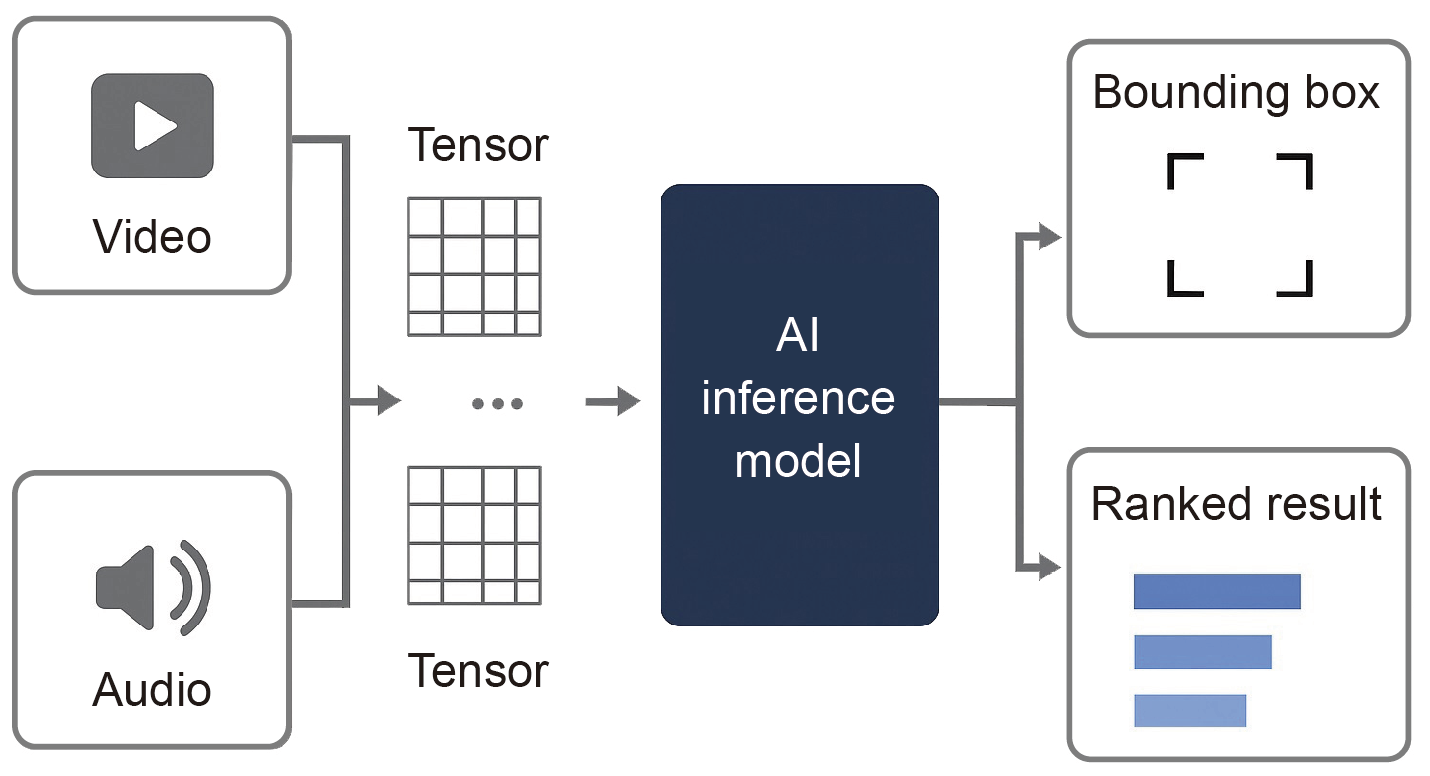

Yoridori is a library of “pick and select” I/O functions for AI integration that also helps with interpreting model output.

Using AI in embedded devices requires extensive specialized knowledge, including image and audio processing, understanding AI model input/output formats, and programming techniques for high-speed processing. However, these skills are not widely available and often become barriers to introducing AI into embedded devices.

We believe overcoming this challenge is crucial for advancing the practical implementation of AI in embedded devices.

Yoridori aims to provide practical input/output processing functions compatible with renowned AI models.

Application developers can focus on the core aspects of AI development thanks to Yoridori support.

Development Environment / Development Tool

Koji ABE

With the “µT-Kernel × AI Learning Environment - Learn and Experience Real-Time OS and edge AI with Line Follower Robot,” which uses micro:bit, you can learn µT-Kernel and edge AI.

Its three major features are as follows.

- Line Follower Robot

  Learn the fundamentals of robot control in a well-balanced way.

- Reinforcement Learning

  Experience the reinforcement learning process and its results firsthand through the performance of the line follower robot.

- Robot Fall Detection Inference

  Create AI models and run fall detection inference using simple edge AI. The AI models are created with Sony's Neural Network Console, which outputs C source code. Running the generated source code on the micro:bit performs fall detection inference.
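To make the reinforcement learning feature more concrete, here is a toy sketch of tabular Q-learning for a line follower. The learning environment's actual algorithm, states, and rewards are not described here, so everything below is an assumption: the three sensor states, the three steering actions, the simulated environment, and the learning parameters.

```python
STATES = ("line_left", "line_center", "line_right")
ACTIONS = ("steer_left", "go_straight", "steer_right")


def simulate(state, action):
    """Toy environment (hypothetical): return (reward, next_state)."""
    if STATES.index(state) == ACTIONS.index(action):
        # Steering toward the line re-centers the robot.
        return 1.0, "line_center"
    if state == "line_center":
        # Steering away from a centered line makes it drift to the other side.
        return -1.0, ("line_right" if action == "steer_left" else "line_left")
    # A wrong move while off-center leaves the robot where it was.
    return -1.0, state


def q_update(q, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """Apply one Q-learning update to the table q."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])


def train(sweeps=200):
    """Sweep over every state-action pair repeatedly and return the Q-table."""
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(sweeps):
        for s in STATES:
            for a in ACTIONS:
                reward, next_state = simulate(s, a)
                q_update(q, s, a, reward, next_state)
    return q


def greedy_action(q, state):
    """Pick the action with the highest learned value for a state."""
    return max(ACTIONS, key=lambda a: q[(state, a)])


q_table = train()
policy = {s: greedy_action(q_table, s) for s in STATES}
```

After training, the greedy policy steers toward the side where the line was detected. On a real robot the table would be updated from sensor readings and observed rewards rather than from a simulated sweep.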